Large language models, often called LLMs, are best known for helping people write emails, answer questions, and summarize documents. A new neuroscience study suggests they may also hint at how your own brain understands speech. Researchers asked whether the internal steps these models use to process words match the split-second changes in brain activity that occur when you listen to a story.

For decades, many scientists believed that language in the brain ran on tidy rules and symbols. In that view, sounds, word parts, grammar, and meaning sit in separate boxes. Modern AI works differently. These systems turn each word into a set of numbers that reflects its context, then pass those numbers through dozens of layers in a network trained to predict the next word. The question was simple but bold: do those layers line up with the timing of your brain’s language circuits?

Earlier studies hinted at a match. Brain scans had shown that context shapes both AI predictions and neural signals. Yet those scans could not resolve timing at the scale of individual words. This research set out to test whether the step-by-step changes inside an AI system track the brain’s moment-to-moment response as each word arrives.

To find out, scientists recorded direct brain signals from nine volunteers being treated for epilepsy. Seven had electrodes placed in areas known to support language. During monitoring, the patients listened to a 30-minute public radio story, “Monkey in the Middle,” while their brain activity was captured with millisecond detail.

The electrodes covered regions along the main language pathway. These included the middle and front parts of the superior temporal gyrus, the inferior frontal gyrus, and the temporal pole. Past work had already shown these regions respond to word meaning. This study focused on exactly when those responses peaked.

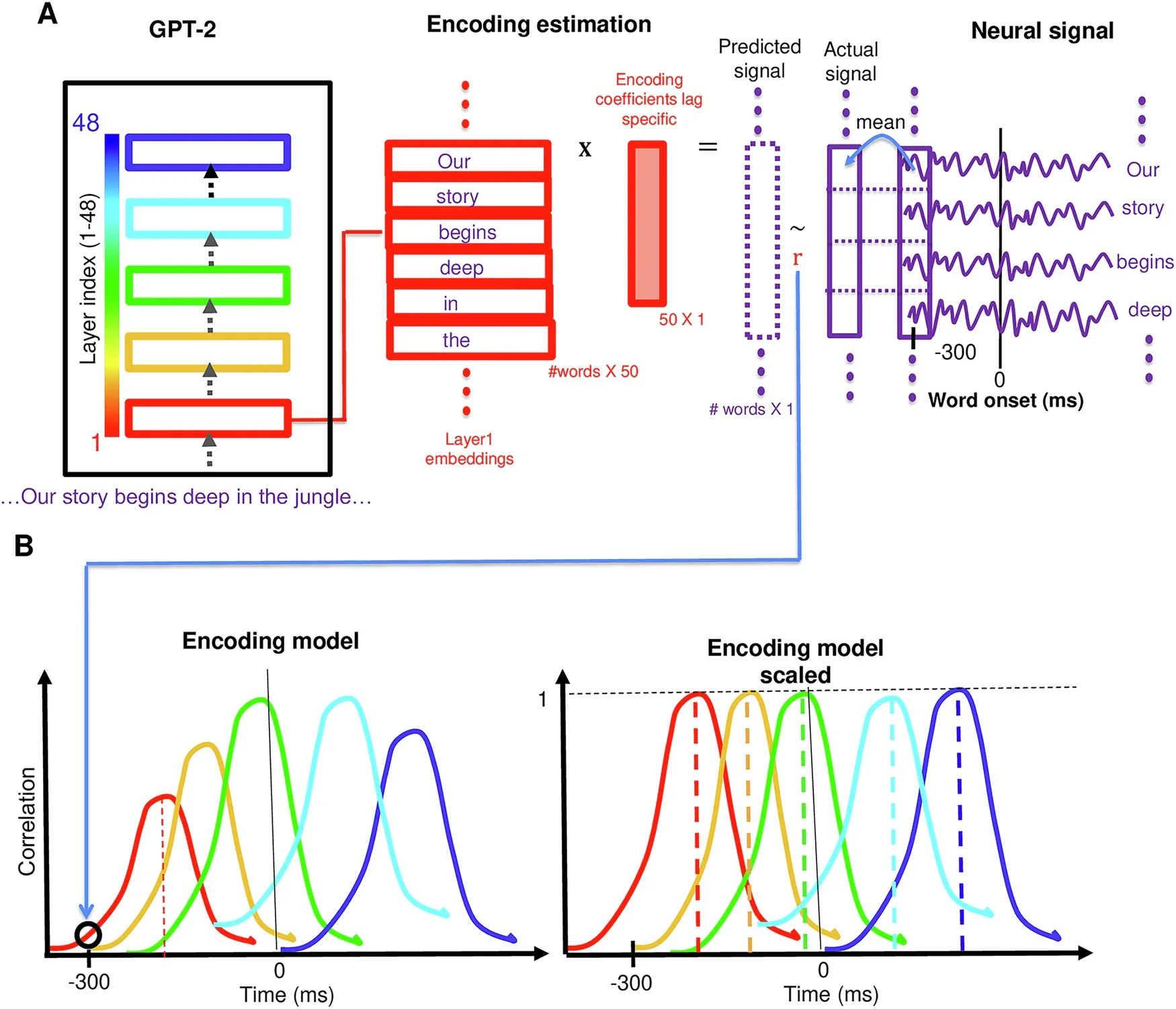

The transcript of the same story was fed to two AI systems, GPT-2 XL and Llama 2. For every word, the team saved the output of each hidden layer inside the models. They then looked for links between those outputs and the brain signals recorded around the moment each word was heard.

For each word, the team examined a 4-second window of neural data, starting two seconds before the word began and ending two seconds after. A simple model tried to predict the brain signal at each point in that window from the AI’s internal data for that word. The researchers repeated this for every electrode, every time lag, and every AI layer, and judged success by how closely the predictions matched real brain activity.

The team also divided words by how well GPT-2 XL predicted them. If the actual word was the model’s top-ranked guess, it was labeled predicted. If it did not appear among the model’s top five guesses, it was labeled not predicted; words ranked second through fifth fell into neither group. This split tested whether the brain and AI align best when both “expect” the same word.
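The labeling rule above is easy to state as code. This is a hedged sketch: it assumes the model’s guesses arrive as a simple dictionary of whole words and probabilities, whereas real models score subword tokens. The function `label_word` and the example probabilities are hypothetical.

```python
def label_word(next_word_probs, actual_word, top_k=5):
    """Label a word by where it ranked in a model's next-word guesses.

    Returns "predicted" if it ranked first, "not predicted" if it fell outside
    the top_k guesses, and None for ranks 2..top_k (left out of both groups).
    """
    ranked = sorted(next_word_probs, key=next_word_probs.get, reverse=True)
    if ranked and ranked[0] == actual_word:
        return "predicted"
    if actual_word not in ranked[:top_k]:
        return "not predicted"
    return None


# Hypothetical guesses for the word after "The monkey climbed ..."
probs = {"the": 0.40, "a": 0.30, "up": 0.10, "into": 0.06,
         "onto": 0.05, "over": 0.04, "down": 0.02}
for word in ["the", "onto", "down"]:
    print(word, "->", label_word(probs, word))
```

Dropping the middle ranks keeps the two groups cleanly separated, so any difference in brain alignment is easier to attribute to whether the word was expected.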

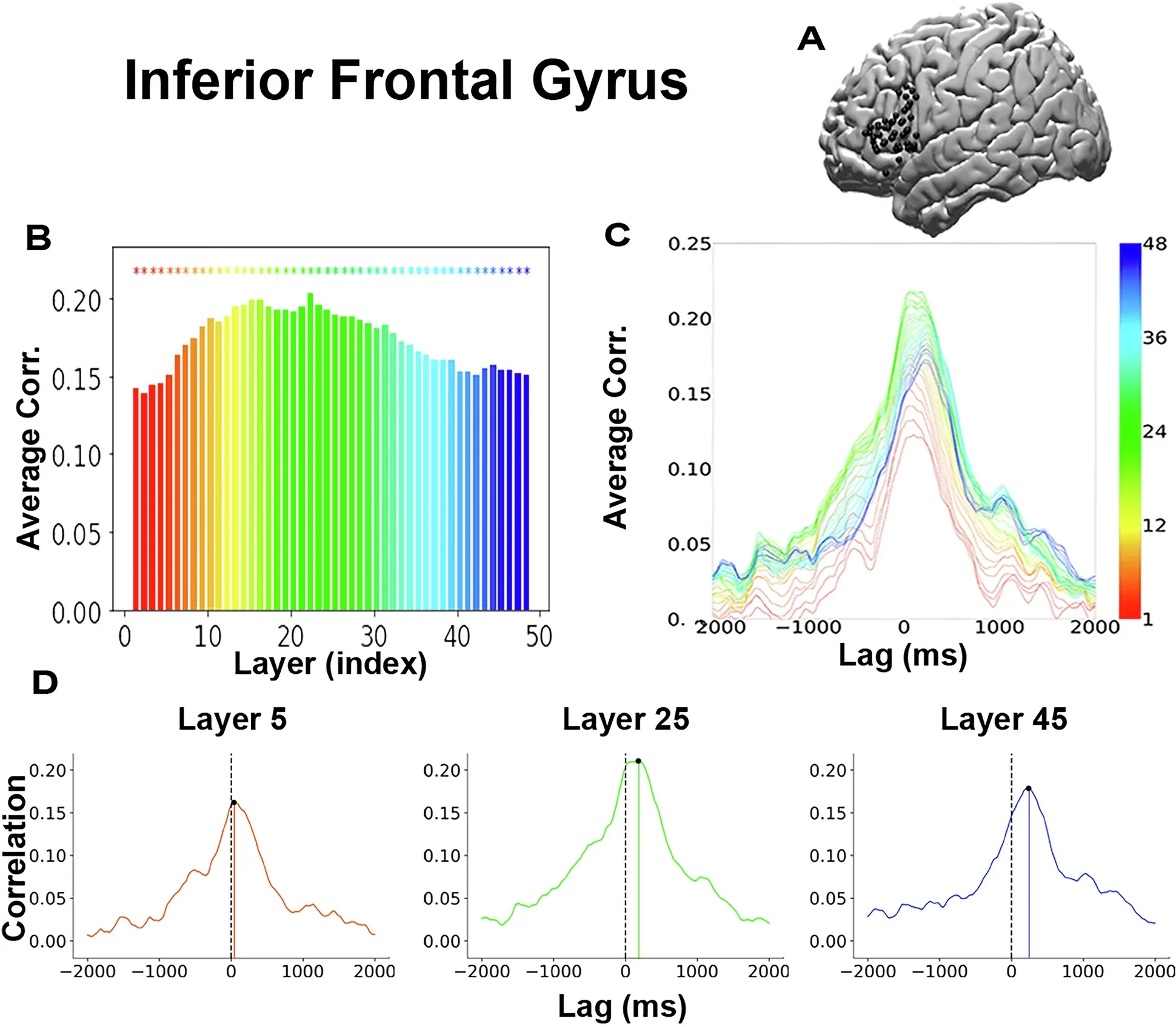

The clearest pattern appeared in the inferior frontal gyrus, a region tied to meaning and structure in speech. Each AI layer’s match with brain activity peaked at a different time after word onset. Middle layers produced the strongest links overall: plotted against layer depth, the strength of the match formed a gentle arch that peaked in the middle.

Timing mattered. Early AI layers matched earlier brain responses, and deeper layers matched later ones. The relationship was tight: as the layer number rose, so did the delay of the brain’s peak response. The statistical link was strong enough that chance was very unlikely to explain it.

The same pattern appeared with Llama 2, suggesting the result was not a fluke of one model. Within the inferior frontal gyrus, two subregions known as Brodmann areas 44 and 45 showed nearly identical timing, which suggests both play a similar role in building meaning.

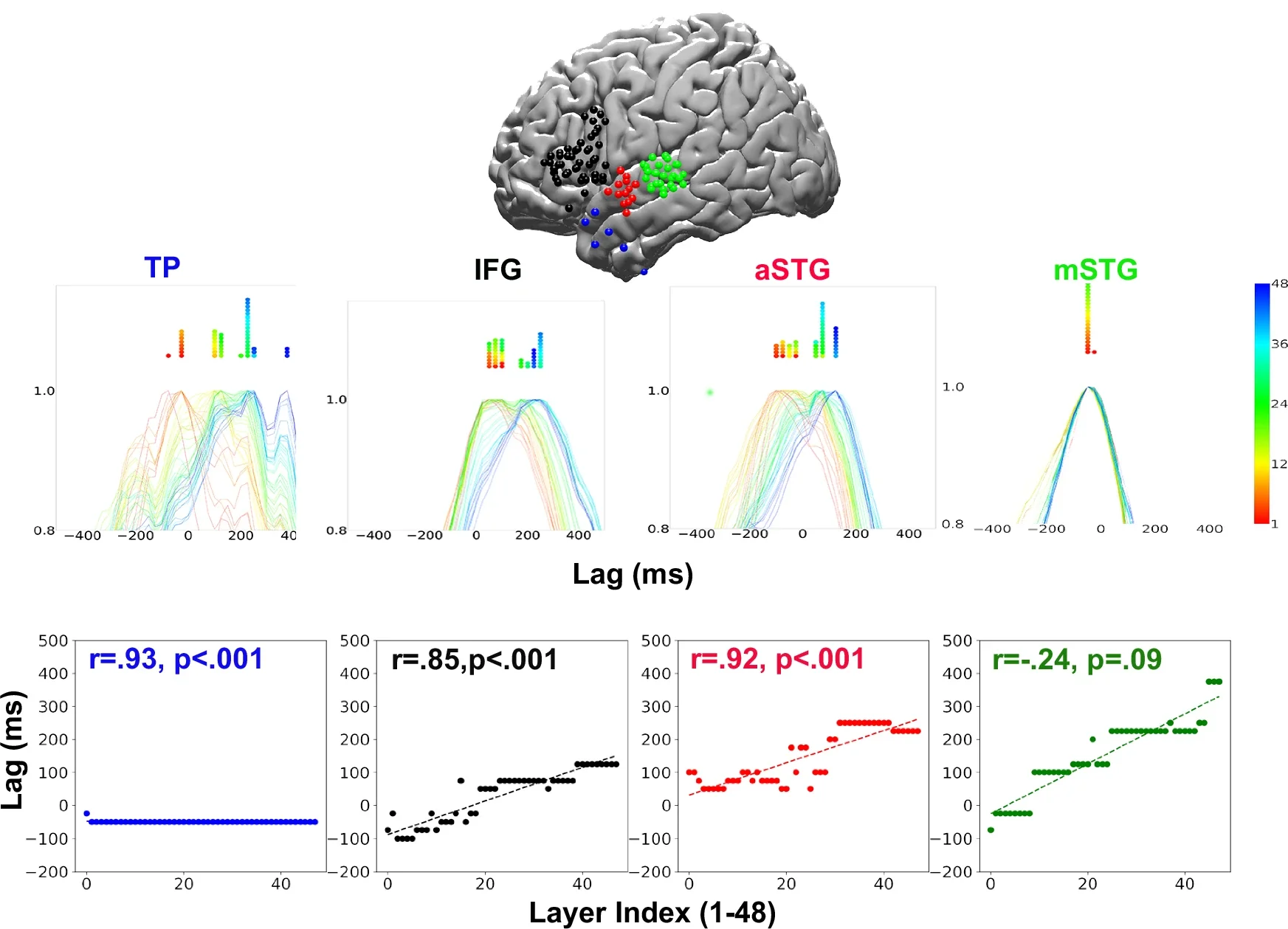

When the scientists checked other language areas, they found a gradient. In the middle superior temporal gyrus, closer to early sound processing, the timing pattern was weak. It grew stronger in the front temporal region and the temporal pole, which are linked to high-level meaning. In the temporal pole, the spread in timing stretched beyond half a second from the earliest to the latest layer match. That widening gap hints at longer integration windows as speech travels up the brain’s language circuit.

To make sure the result did not come from simply mixing word features, the team created fake layers by blending the first and last real layers. Those artificial steps matched the brain less well than the true layers did, suggesting that the deep, nonlinear transformations inside real models matter.
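A control of this kind can be sketched by assuming that “blending” means straight-line (linear) interpolation between a word’s first-layer and last-layer outputs; that reading is an assumption here, and the function `interpolated_layers` and the toy vectors are hypothetical. Because every fake layer is just a weighted average of the two endpoints, it cannot contain any structure that the real intermediate layers might add.

```python
def interpolated_layers(first, last, n_layers):
    """Build n_layers control 'layers' on the straight line from first to last.

    Layer 0 equals `first`, layer n_layers - 1 equals `last`, and every layer in
    between is a weighted average of the two, so no new nonlinear structure is added.
    """
    if n_layers < 2:
        raise ValueError("need at least two layers to interpolate")
    layers = []
    for k in range(n_layers):
        alpha = k / (n_layers - 1)  # 0.0 at the first layer, 1.0 at the last
        layers.append([(1 - alpha) * a + alpha * b for a, b in zip(first, last)])
    return layers


# Toy 2-number embeddings for one word (real ones are far larger).
fake = interpolated_layers([0.0, 2.0], [4.0, -2.0], n_layers=5)
for k, emb in enumerate(fake):
    print(k, emb)
```

Feeding such fake layers through the same encoding analysis as the real ones gives a baseline: if real middle layers beat their interpolated stand-ins, the gain comes from what the network computes, not from a gradual mix of its input and output.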

The researchers also tested classic language features made by hand. These included sound traits, word parts, grammar codes, and simple meaning vectors. Each set could predict some brain activity. None followed the neat timing pattern seen with AI layers.

Those older tools did not show a steady advance of peaks over time. In other words, they fell short of matching how the brain builds meaning moment by moment. In this head-to-head test, modern AI offered the tighter fit.

The study appeared in Nature Communications and was led by Dr. Ariel Goldstein of the Hebrew University with Dr. Mariano Schain of Google Research, along with Prof. Uri Hasson and Eric Ham of Princeton University. Goldstein said, “What surprised us most was how closely the brain’s temporal unfolding of meaning matches the sequence of transformations inside large language models. Even though these systems are built very differently, both seem to converge on a similar step-by-step buildup toward understanding.”

The team has released the full dataset to the public, including audio, brain recordings, and word data. That lets other scientists test fresh ideas on the same ground and compare results side by side.

The authors stress that AI does not learn the way a child does. People learn through speech, sight, touch, and social cues. These models learn from vast amounts of text. Yet their inner steps still echo the brain’s timing in key regions.

The work leaves open questions. Would models trained on spoken or social input match the brain even better? Could different designs sharpen the fit? And how can dense number patterns in AI be linked back to the categories linguists favor?

For now, the message is clear. As you listen to a story, your brain runs through a cascade of changes that look strikingly like the layers inside today’s language models.

These findings could help guide better tools for treating language disorders by pointing to timing targets in specific brain regions. They may also guide new AI designs that handle speech more like people do.

Shared benchmarks can speed research by letting teams test ideas on the same data.

In the long run, closer ties between neuroscience and AI could improve learning software, speech tech, and brain therapies.

Research findings are available online in the journal Nature Communications.


The post AI reveals clues to how the human brain understands speech appeared first on The Brighter Side of News.